ThinkSound

🌐 English | Simplified Chinese | Traditional Chinese | Spanish | French | Japanese

If you find this project useful, a star ⭐ on GitHub would be greatly appreciated!

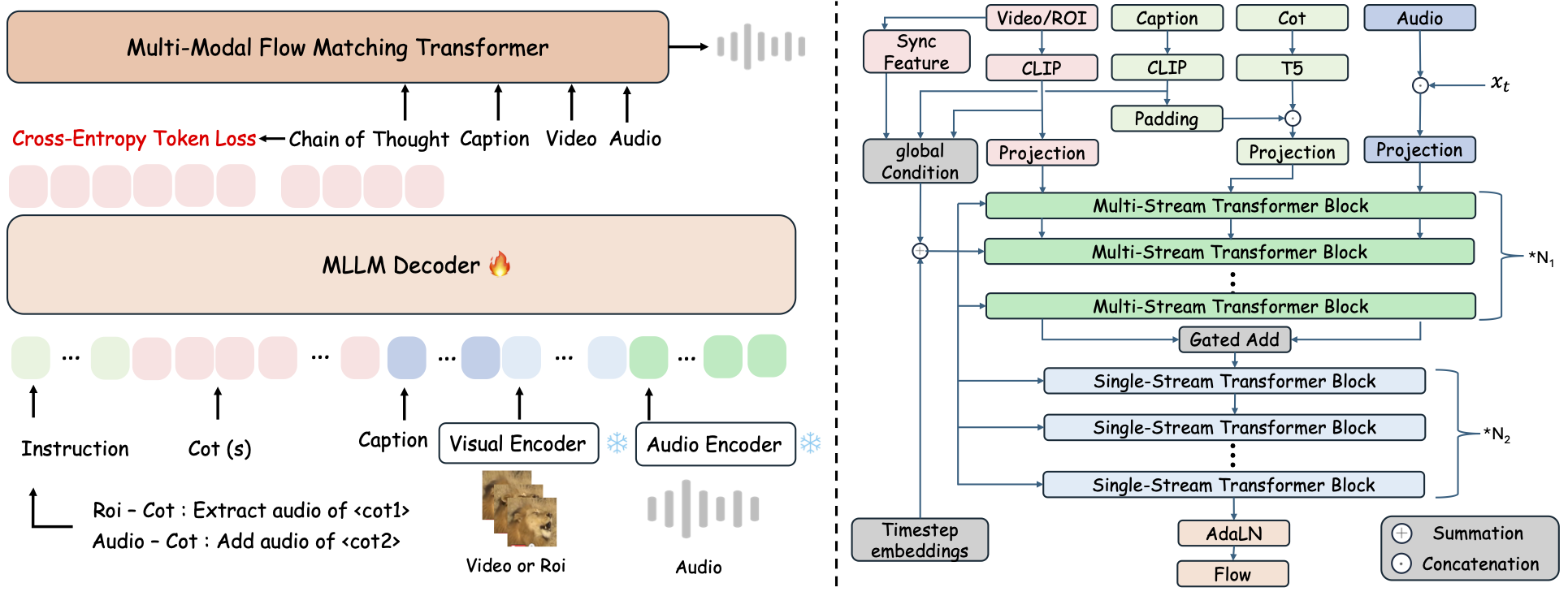

ThinkSound is a unified Any2Audio generation framework with flow matching guided by Chain-of-Thought (CoT) reasoning.

PyTorch implementation for multimodal audio generation and editing: generate or edit audio from video, text, and audio, powered by step-by-step reasoning from Multimodal Large Language Models (MLLMs).

📰 News

- 2025.11.25 🔥 Online PrismAudio Demo is live - try it now!

- 2025.11.25 🔥 PrismAudio paper released on arXiv, the first multi-dimensional CoT-RL framework for Video-to-Audio Generation!

- 2025.09.19 🎉 ThinkSound has been accepted to the NeurIPS 2025 Main Conference!

- 2025.09.01 Our AudioCoT dataset is now open-sourced and available on Hugging Face!

- 2025.07.17 🧠 Finetuning enabled: training and finetuning code is now publicly available, along with clear usage instructions to help you customize and extend ThinkSound with your own data.

- 2025.07.15 📦 Simplified installation and usability: dependencies are now on PyPI for easy cross-platform setup, and Windows `.bat` scripts automate environment creation and script running.

- 2025.07.08 🔧 Major update: the model is now lighter-weight with optimized memory and GPU usage, and supports high-throughput audio generation at scale!

- 2025.07.01 Online demo on Hugging Face Spaces and ModelScope for interactive experience!

- 2025.07.01 Released inference scripts and a web interface.

- 2025.06 ThinkSound paper released on arXiv!

- 2025.06 Online Demo is live - try it now!

🚀 Features

- Any2Audio: Generate audio from arbitrary modalities — video, text, audio, or their combinations.

- Video-to-Audio SOTA: Achieves state-of-the-art results on multiple V2A benchmarks.

- CoT-Driven Reasoning: Chain-of-Thought reasoning for compositional and controllable audio generation via MLLMs.

- Interactive Object-centric Editing: Refine or edit specific sound events by clicking on visual objects or using text instructions.

- Unified Framework: One foundation model supports generation, editing, and interactive workflows.

✨ Method Overview

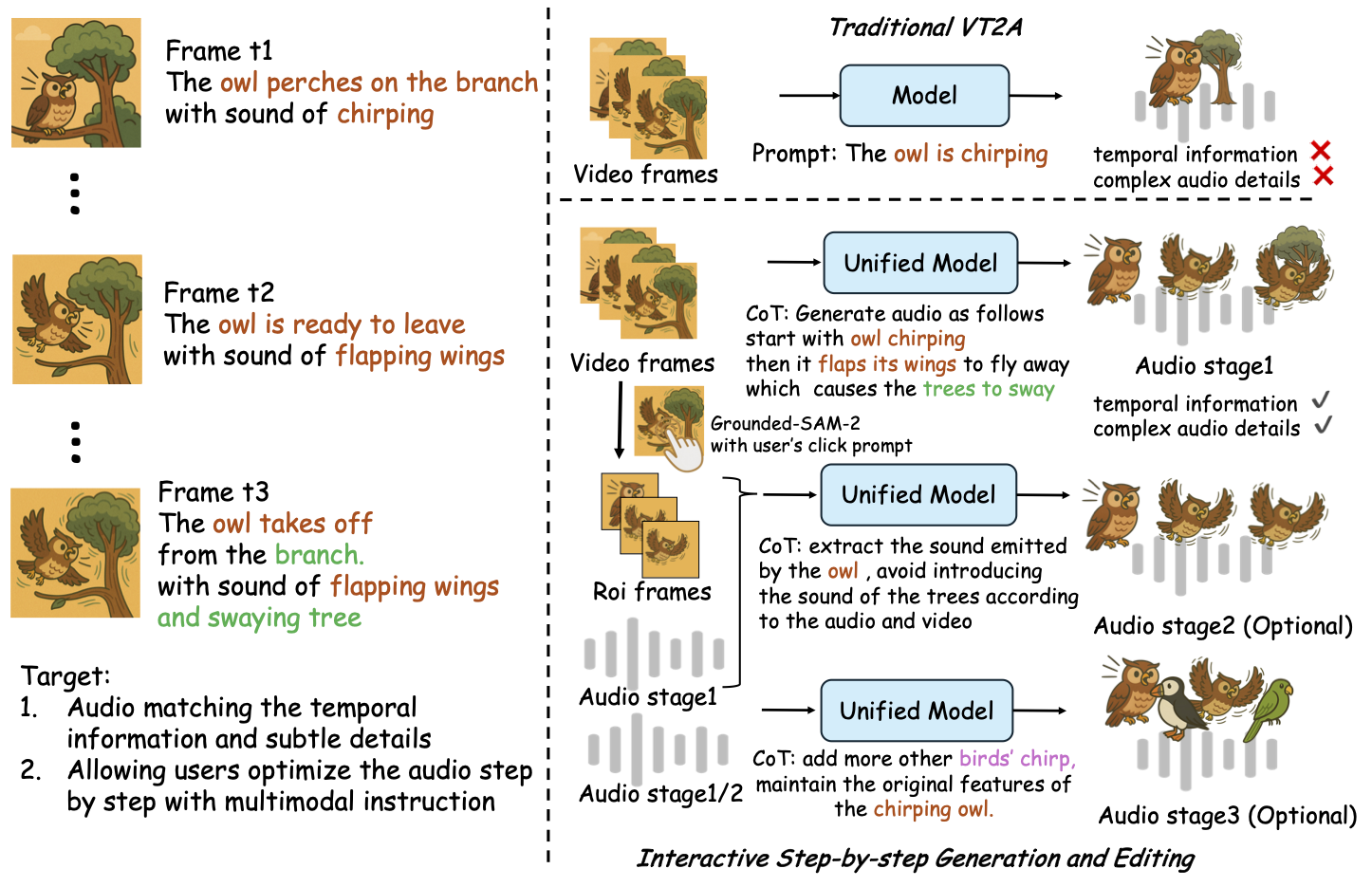

ThinkSound decomposes audio generation and editing into three interactive stages, all guided by MLLM-based Chain-of-Thought (CoT) reasoning:

- Foley Generation: Generate foundational, semantically and temporally aligned soundscapes from video.

- Object-Centric Refinement: Refine or add sounds for user-specified objects via clicks or regions in the video.

- Targeted Audio Editing: Modify generated audio using high-level natural language instructions.

⚡ Quick Start

Environment Preparation:

```bash
git clone https://github.com/liuhuadai/ThinkSound.git
cd ThinkSound
conda create -n thinksound python=3.10
conda activate thinksound
pip install thinksound
conda install -y -c conda-forge 'ffmpeg<7'
# Download the pretrained weights from https://huggingface.co/liuhuadai/ThinkSound into the ckpts/ directory.
# Model weights can also be downloaded from https://www.modelscope.cn/models/iic/ThinkSound
git lfs install
git clone https://huggingface.co/liuhuadai/ThinkSound ckpts
```

To improve inference and training speed, you may optionally install a FlashAttention backend compatible with your system and PyTorch version.
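The install steps pin FFmpeg below version 7 and rely on Git LFS to pull the real weights into `ckpts/`. Below is a hypothetical sanity check you could source and run from the repository root after installing; the function names are illustrative and not part of ThinkSound. It assumes the first line of `ffmpeg -version` looks like `ffmpeg version 6.1.1 ...`, and it only inspects top-level files in `ckpts/`.

```bash
# Hypothetical post-install check (not shipped with ThinkSound).
# Source this file from the repository root, then call check_environment.

# Print the major version from the first line of `ffmpeg -version`,
# e.g. "ffmpeg version 6.1.1 Copyright ..." -> 6. Prints nothing for
# lines it cannot parse, such as git builds ("ffmpeg version N-...").
ffmpeg_major_version() {
  printf '%s\n' "$1" | sed -n 's/^ffmpeg version n\{0,1\}\([0-9][0-9]*\).*/\1/p'
}

# Succeed only if the version line reports a major version below 7,
# matching the 'ffmpeg<7' pin used during installation.
ffmpeg_version_ok() {
  local major
  major=$(ffmpeg_major_version "$1")
  [ -n "$major" ] && [ "$major" -lt 7 ]
}

# Succeed if file $1 is still a Git LFS pointer stub instead of real weights
# (this happens when `git lfs install` was skipped before cloning).
is_lfs_pointer() {
  head -c 100 "$1" 2>/dev/null | grep -q '^version https://git-lfs.github.com/spec/v1'
}

check_environment() {
  local line f
  if ! command -v ffmpeg >/dev/null 2>&1; then
    echo "ffmpeg not found on PATH" >&2; return 1
  fi
  line=$(ffmpeg -version | head -n 1)
  if ! ffmpeg_version_ok "$line"; then
    echo "expected ffmpeg < 7, got: $line" >&2; return 1
  fi
  if [ ! -d ckpts ]; then
    echo "ckpts/ not found; download the pretrained weights first" >&2; return 1
  fi
  # Only top-level files are checked; weights in subdirectories are not inspected.
  for f in ckpts/*; do
    if [ -f "$f" ] && is_lfs_pointer "$f"; then
      echo "$f is a Git LFS pointer; run 'git lfs pull' inside ckpts/" >&2; return 1
    fi
  done
  echo "environment looks OK"
}
```

If you save it as, say, `check_env.sh` (a hypothetical name), run `. ./check_env.sh && check_environment`. Detecting LFS pointer stubs catches the common failure where the clone succeeds but the checkpoints are only a few hundred bytes of text.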

✅ Windows Tip:

Windows users can simply run setup_windows.bat (or double-click it) to automatically create the conda environment, install all dependencies (including FFmpeg), and download the pretrained model — no manual setup required.

Make sure `conda` and `git` are installed and available in your system PATH before running the script.

▶️ Run the Demo

#### Linux/macOS

```bash
chmod +x scripts/demo.sh
./scripts/demo.sh <path-to-your-demo-video> <title> <CoT description> [use-half]
```
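`scripts/demo.sh` takes a video path, a title, and a CoT description, optionally followed by `use-half`. Titles and CoT descriptions usually contain spaces, so each must be quoted to reach the script as one argument. The hypothetical wrapper below (its name is illustrative, not part of ThinkSound) checks the inputs before forwarding them unchanged with `"$@"`; it assumes you call it from the repository root.

```bash
# Hypothetical wrapper around scripts/demo.sh (not shipped with ThinkSound).
# It validates the arguments, then forwards them with "$@" so that each
# quoted multi-word title or CoT description stays a single argument.
run_demo() {
  if [ "$#" -lt 3 ] || [ "$#" -gt 4 ]; then
    echo "usage: run_demo <video> <title> <cot-description> [use-half]" >&2
    return 2
  fi
  if [ ! -f "$1" ]; then
    echo "video not found: $1" >&2
    return 1
  fi
  # The only optional flag documented for demo.sh is the literal word use-half.
  if [ "$#" -eq 4 ] && [ "$4" != "use-half" ]; then
    echo "optional 4th argument must be 'use-half', got: $4" >&2
    return 2
  fi
  ./scripts/demo.sh "$@"
}
```

For example (the file name and texts are placeholders): `run_demo my_clip.mp4 "Dog barking" "A dog barks twice in a quiet backyard." use-half`.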

#### Windows

You can use the provided `.bat` script instead:

```bash
.\scripts\demo.bat <path-to-your-demo-video> <title> <CoT description> [use-half]
```

**Note:**

- `<path-to-your-demo-video>`: The path to a single video.
- `[use-half]` (optional): Add `use-half` at the end to enable half-precision feature extraction.

📦 Batch Inference

#### Linux/macOS

```bash
chmod +x scripts/eval_batch.sh
./scripts/eval_batch.sh <video_path> <csv_path> <save_path (optional)> [use-half]
```

#### Windows

Use the corresponding `.bat` script:

```bash
.\scripts\eval_batch.bat <video_path> <csv_path> <save_path (optional)> [use-half]
```
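Batch inference expects every `.mp4` in `<video_path>` to have the same duration. A hypothetical pre-flight check for that requirement is sketched below; the function names are illustrative, it relies on `ffprobe` from the FFmpeg install, and it assumes that durations rounded to two decimal places count as equal.

```bash
# Hypothetical pre-flight check (not part of ThinkSound) for eval_batch.sh.

# Print the duration of one video in seconds, rounded to 2 decimals.
video_duration() {
  ffprobe -v error -show_entries format=duration \
    -of default=noprint_wrappers=1:nokey=1 "$1" | awk '{ printf "%.2f\n", $1 }'
}

# Print "<duration><TAB><file>" for every .mp4 directly inside directory $1.
list_video_durations() {
  local f
  for f in "$1"/*.mp4; do
    [ -e "$f" ] || continue  # glob matched nothing: skip the literal pattern
    printf '%s\t%s\n' "$(video_duration "$f")" "$f"
  done
}

# Read "<duration><TAB><file>" lines on stdin, report every file whose
# duration differs from the first one, and fail if any mismatch was found.
all_durations_equal() {
  awk -F'\t' '
    NR == 1 { ref = $1 }
    $1 != ref {
      bad = 1
      printf "duration mismatch: %s (%ss, expected %ss)\n", $2, $1, ref > "/dev/stderr"
    }
    END { exit bad }
  '
}
```

With `VIDEO_DIR` and `CSV_PATH` set to your own paths, you could chain it as `list_video_durations "$VIDEO_DIR" | all_durations_equal && ./scripts/eval_batch.sh "$VIDEO_DIR" "$CSV_PATH"`, so a mismatched clip is caught before any features are extracted.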

**Note:**

- `<video_path>`: Path to the root directory containing all .mp4 videos to be processed (all videos must be of equal duration).
- `<csv_path>`: A CSV file with text prompts for each video (see `demo_test.csv` for the format).
- `<save_path>` (optional): Where to save generated audio. Defaults to `results/features`.
- `[use-half]` (optional): Add `use-half` at the end to enable half-precision feature extraction.

Web Interface Usage

For an interactive experience, launch the Gradio web interface:

```bash
python app.py
```

🏋️ Train the Model

See [`Training.md`](https://raw.githubusercontent.com/FunAudioLLM/ThinkSound/master/docs/Training.md).

📝 TODO & Future Plans

- [ ] Release a more powerful foundation model covering multiple domains to provide more engaging and immersive foley creation
- [ ] Add support for additional modalities and downstream tasks
- [ ] Release models at different scales
- [x] Open-source AudioCoT dataset and automated pipeline
- [x] Release training scripts for ThinkSound models
- [x] A beginner-friendly Windows quick-start README

📄 License

This project is released under the Apache 2.0 License.

> **Note:**
>
> The code, models, and dataset are **for research and educational purposes only**.
>
> **Commercial use is NOT permitted.**
>
> For commercial licensing, please contact the authors.

**📦 Third-Party Components**

- **Stable Audio Open VAE** (by Stability AI): This repository includes a fine-tuned VAE from [Stable Audio Open](https://huggingface.co/stabilityai/stable-audio-open-1.0/), licensed under the [Stability AI Community License](https://raw.githubusercontent.com/FunAudioLLM/ThinkSound/master/third_party/LICENSE_StabilityAI.md). **Commercial use and redistribution require prior permission from Stability AI.**
- 📘 **All other code and models** are released under the Apache License 2.0.

Acknowledgements

Many thanks to:

- **stable-audio-tools** (by Stability AI): for providing an easy-to-use framework for audio generation, as well as the VAE module and weights.

- **MMAudio**: for the implementation of the MM-DiT backbone in the audio domain.

📖 Citation

If you find ThinkSound useful in your research or work, please cite our paper:

```bibtex
@misc{liu2025thinksoundchainofthoughtreasoningmultimodal,
  title={ThinkSound: Chain-of-Thought Reasoning in Multimodal Large Language Models for Audio Generation and Editing},
  author={Huadai Liu and Jialei Wang and Kaicheng Luo and Wen Wang and Qian Chen and Zhou Zhao and Wei Xue},
  year={2025},
  eprint={2506.21448},
  archivePrefix={arXiv},
  primaryClass={eess.AS},
  url={https://arxiv.org/abs/2506.21448},
}
```

📬 Contact

✨ Feel free to [open an issue](https://github.com/liuhuadai/ThinkSound/issues) or contact us via email ([liuhuadai@zju.edu.cn](mailto:liuhuadai@zju.edu.cn)) if you have any questions or suggestions!

---

Translated by [OpenAiTx](https://github.com/OpenAiTx/OpenAiTx) | Last indexed: 2026-01-07

---

</div>

<div class="original-link">

<strong>Original README:</strong> <a href="https://raw.githubusercontent.com/FunAudioLLM/ThinkSound/master/README.md" target="_blank" rel="noopener noreferrer">View on GitHub</a>

</div>

</div>


</body>

</html>